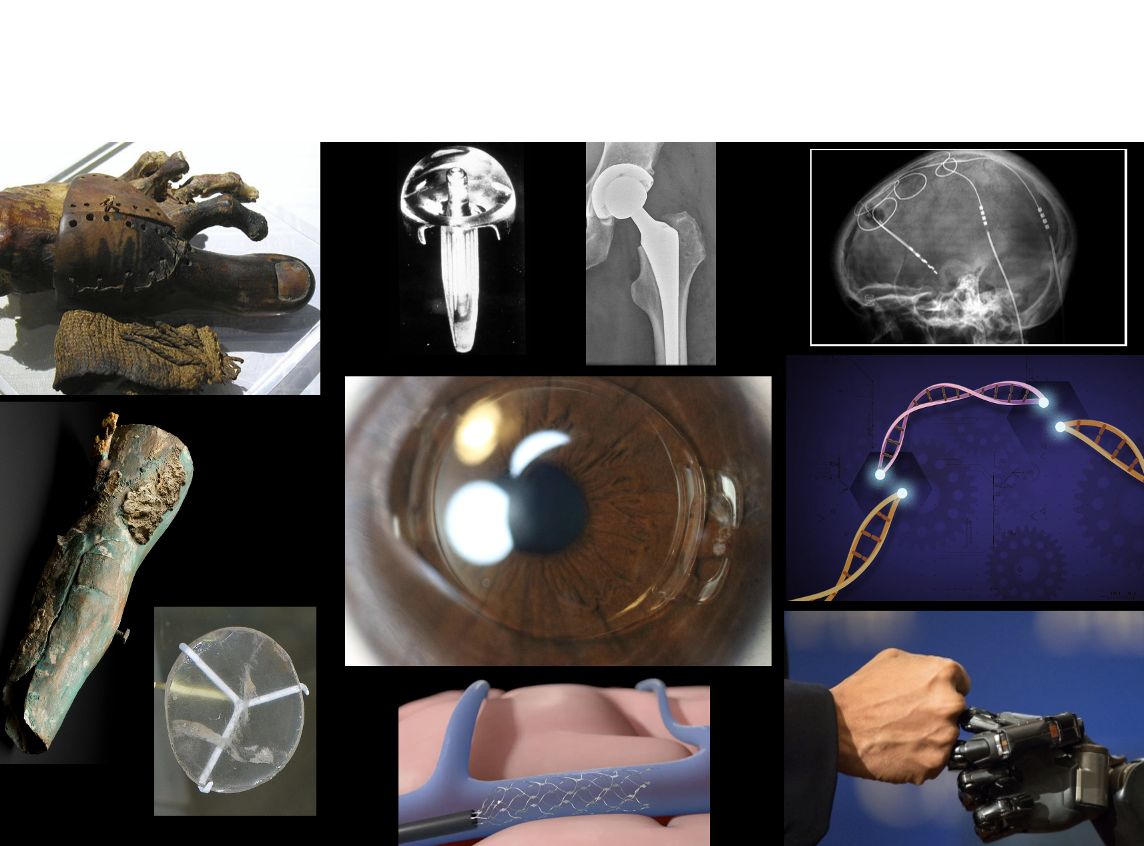

You’re in Thebes in 1,000 BCE, striding through a crowded plaza toward a temple. Passersby sneak glances at your new big toe. Gorgeously crafted from wood and leather, the faux toe lets you balance and move naturally: your body feels whole.

Suddenly, a time traveler from 2023 appears as you enter the temple portico.

She has a pacemaker, artificial lenses in her eyes, titanium hips, patches on her torso with a glucose monitor and an insulin pump, probes in her brain to control tremors, and peers at you through augmented reality glasses with embedded cameras, speakers, and microphones. “Hi. I’m you 3,000 years from now,” says the time traveler. Believing you behold the temple’s god, you have a massive heart attack and die.

“Wow. Glad I have a cobalt-chromium stent in my coronary artery,” the time traveler remarks.

We have enhanced and repaired our bodies with the best technology available throughout recorded history. Making and using tools is fundamental to human culture, and so is physical modification. Today’s augmentations seemed like science fiction just 100 years ago. In the future, we will boost how our bodies interact with the world and how our minds perceive reality.

Let’s look at the history of human enhancement and the huge opportunities and challenges ahead.

Wood, Leather, Bronze, and Stone

The wood and leather toe from 1050 BCE Thebes is called the “Cairo Toe.” Craftsmen serving well-born clients as early orthopedists used the day’s technology to replace the missing toe of a high priest’s daughter.

Public Domain

700 years later, Roman legions specialized in conquering lands and killing people. Roman soldiers were often maimed in the process, so an artificial leg was a grim but real prospect for legionnaires. The Capua Leg, from about 300 BCE, when Rome had finished conquering most of the Italian peninsula, has a hollow wood core clad in bronze. The bronze sheath resembles Roman shin armor, showing both military tech transfer and the long history of soldiers’ suffering.

CC BY 4.0

Self-enhancements advance with technology. Take reading glasses: we’ve always wanted less squinting and more seeing. The Nimrud Lens is a 3,000-year-old ground and polished clear quartz disc from Assyria that acts as a 3X magnifying glass.

Reading stones, magnifiers laid on top of text, were fashioned in al-Andalus in the 800s by polymath Abbas ibn Firnas. Ibn Firnas also created a process for making clear glass, a crucial step in reducing costs and increasing the availability of vision aids. Reading stones are still popular desk toys; I procrastinate plenty with mine.

Source: Hedwig Storch

CCA-SA 3.0

So Now We Have Glass, Let’s See…

Quarrying, grinding, and polishing crystals is expensive, and quartz is heavy. Mass-produced clear glass was the solution for popularizing glasses. The business environment in Venice and Murano in the 13th Century was ideal for a thriving glass industry. Demand for religious art like stained-glass windows, and for fine glassware for wealthy merchant families, spurred investment and attracted talented artisans.

Friar Salvino D’Armate wanted to help aging colleagues continue reading. Optics knowledge from Islamic scholars fused with refined clear glass-making techniques enabled the friar to invent eyeglasses in 1284. Venetian entrepreneurs raced into the new market and started the first Eyeglasses Guild.

It’s Electrifying

Centuries later, inventors took augmentation to new levels by generating and controlling electricity. In the 1870s, personal electronics sprang from garages and labs worldwide. Money flowed toward a stunning list of new devices, much as it flows toward AI startups today:

- 1876: Telephones

- 1877: Phonographs

- 1877: Microphones

- 1879: Light bulbs

We opened the innovation throttle by harnessing and miniaturizing light and sound. Miller Reese Hutchison, an Alabama Medical College student, wanted to help a friend with deafness. He designed the first electric human enhancement, a hearing aid, in 1898. Hutchison’s “Akouphone” was a bulky tabletop system plugged into a wall socket. Then, as now, electronics swiftly improved in size and power, and so did batteries. A portable design, the “Acousticon,” launched in 1905.

Replacing Parts inside the Body

At the end of the 19th Century, tech’s forward charge began revolutionizing orthopedics. In 1891, Professor Themistocles Glück, a renowned orthopedic surgeon, proposed ivory as a replacement material for the femoral heads of patients whose hip joints had been destroyed by tuberculosis. Ivory wasn’t high-tech, but replacing a crucial internal body part was audacious.

Inventors and surgeons have since experimented with a multitude of artificial hip materials including glass, ceramic, Bakelite, acrylics and other plastics, stainless steel, titanium, and cobalt-chromium alloys.

CC BY-SA 4.0

Spurred by the horrific world wars, investment and innovation accelerated in electronics, materials science, and computation, and human enhancement leveraged these advances heavily. New creations, like artificial lenses, would replace or augment poorly functioning parts inside the body.

It is common for the lenses of our eyes to become cloudy with age, a condition called cataracts. Nearly a quarter of all senior citizens in the United States have cataracts, and the impacts can be debilitating. Sufferers may trip frequently or become unable to drive. Cataracts cause half of all blindness worldwide.

CC BY-SA 3.0

In 1949, human lenses clouded by cataracts were replaced for the first time with artificial intraocular lenses (IOLs). Made from perfectly clear polymers, IOLs are finely crafted to focus light onto the retina. By the early 1990s, these artificial lenses one-upped natural biology by incorporating UV-blocking materials to protect the retina from sun damage.

By: Isaac K

CCA-SA 4.0

The numbers for cataract surgery are stunning. Half a billion lens replacements have happened since 1995 alone, and 28 million now occur annually.

A Can of Kiwi Shoe Polish

A beating heart is the feeling and sound of life. A heart not beating with the right rhythm is potentially deadly. In 1958 in Stockholm, 43-year-old Arne Larsson was not supposed to live. He collapsed over 20 times daily due to a low heart rate. On October 8, operating late in the evening to avoid prying eyes, pioneering surgeon Åke Senning implanted an artificial pacemaker in Larsson’s chest. The device leveraged the recently invented transistor, repurposed inventions from MIT’s Radiation Lab and WWII field telephones, a Popular Electronics do-it-yourself amplifier design, a new acrylic glue, and a can of Kiwi shoe polish as a mold. Larsson lived to be 86.

Micromachines Inside Our Body

Microelectronics, bio-compatible materials, and growing knowledge of how our brain understands electric signals as sound led to the cochlear implant in 1961. A pivotal development in helping people with deafness to hear, the implant transmits signals representing sound to the brain.

A microphone on the patient’s head picks up sound and sends it to a battery-operated audio-processing computer. A fine electrode wire from the computer is threaded into the cochlea, the hollow, spiral-shaped cavity in the inner ear. Electric patterns stimulate auditory nerve fibers traveling from the cochlea to the brain, where the signals are recognized as sound.

Humans have suffered from diabetes throughout recorded history. Since the 1920s, the disease has commonly been treated by controlling blood sugar with injected insulin. For decades, correctly timing, dosing, and delivering insulin required finger pricks, blood samples, and syringe injections. It is a clumsy process with plenty of opportunities for mistakes.

Advances in batteries, low-power electronics, and miniature pumps enabled Dean Kamen to create a wearable insulin pump in 1976. His invention, the “autosyringe,” automatically injected insulin at programmed intervals.

The words on the system are not upside-down to the user, who looks down from above.

(Retrieved from: https://www.researchgate.net/figure/The-AutoSyringe-device-for-delivering-insulin-shown-here-worn-by-AP-photographer-Patrick_fig3_351661980)

The late 20th Century saw a paradigm shift in miniaturized sensors. Accurate sensors for chemicals, fluid flows, temperature, pressure, electric fields, and more shrank to remarkable dimensions. Compact, robust, extremely low-power glucose sensors led to the first FDA-approved wearable continuous glucose monitor in 1999.

Today, millions of people with diabetes are like our time traveler: they wear small patches on their torso, connecting their bodies with liberating treatments for their disease.

Coronary artery disease, where plaque build-up closes off the heart’s blood pathways, is a killer. Stents prop open those arteries so life-sustaining blood can flow. These micro-mechanical miracles are complex, cylindrically shaped hollow structures. They incorporate cobalt-chromium and tantalum initially used in turbine engines, aircraft parts, and electronic components. The first coronary artery stent was implanted in a patient in France in 1986; more than two million people receive this life-saving treatment annually.

From Heart to Brain

We feel life in our heartbeat, and we feel our heartbeat with our brain. (Cue up Pink Floyd’s “Eclipse.”) Everything we sense, along with our ideas, emotions, memories, and hopes, dwells in our most complex and least understood organ. Whereas the cochlear implant connects to a nerve pathway leading to the brain, Deep Brain Stimulation (DBS) electrodes are inserted through the skull and sunk far into delicate brain tissue.

Today the system treats Parkinson’s Disease, epilepsy, and even obsessive-compulsive disorder. The first DBS system, implanted by a team in Grenoble in 1986, leveraged probes and electronics from the implantable cardiac pacemaker.

The brain controls our body’s experience with the world around us. When a connection with our limbs or senses is severed, we lose interaction with our environment. Connecting the brain to machines that work with and around motionless limbs or sightless eyes can bring people with disabilities back into the physical world. Researchers and entrepreneurs are linking brains to computers and bridging severed nerves using brain probes, miniature motors, and chips more powerful than the supercomputers of just 25 years ago.

Elon Musk and his company, Neuralink, have brought panache and fanfare to brain-computer interfaces or BCIs, but they are not the field’s only competitors. BrainGate is a neural implant system where 100 impossibly thin implanted electrodes catch faint signals among neurons.

Another competitor, Synchron, has conducted human trials of its brain sensor array since 2021. Like many innovators, Synchron engineers have repurposed existing tech. This is the “Stentrode”: does it look familiar?

Yep: a stent. Made of a nickel-titanium alloy used in coronary artery stents, the Stentrode adds a twist: 16 or more embedded sensors capturing chatter among neurons. It’s like a radio antenna inside your head.

This antenna is not technically in your brain. It’s propped up in blood vessels running through your brain. Synchron has a cool video showing the Stentrode in action.

Capturing the brain’s noise is only helpful if you can understand it. A crucial part of BCIs is making sense of the brain’s 100 billion signals per second, which takes serious computing and specialized software. By filtering signals and matching patterns, Synchron’s system acts like a microphone that listens to every human conversation on Earth and picks out a small group chanting, “Press my right index finger,” whenever the patient thinks about making a mouse click.
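To make “filtering and matching patterns” a little more concrete, here is a toy Python sketch. It is not Synchron’s software, which the post doesn’t describe; the single-channel signal, the “click” template, the threshold, and all function names are hypothetical. It smooths the signal with a moving average (the filter), slides a stored template along it, and reports where the correlation crosses a threshold (the pattern match). Real decoders combine many channels and far more sophisticated models.

```python
import math


def moving_average(signal, width):
    """Low-pass filter: average each sample with the width-1 samples before it.

    Samples before the start of the recording are treated as zeros.
    """
    out = []
    total = 0.0
    for i, x in enumerate(signal):
        total += x
        if i >= width:
            total -= signal[i - width]
        out.append(total / width)
    return out


def normalized_correlation(a, b):
    """Similarity in [-1, 1] between two equal-length windows (Pearson correlation)."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    da = [x - mean_a for x in a]
    db = [y - mean_b for y in b]
    denom = math.sqrt(sum(x * x for x in da) * sum(y * y for y in db))
    if denom == 0:
        return 0.0  # a flat window carries no pattern to match
    return sum(x * y for x, y in zip(da, db)) / denom


def detect_clicks(signal, template, threshold=0.9, width=3):
    """Return start indices where the filtered signal resembles the filtered template."""
    filtered = moving_average(signal, width)
    pattern = moving_average(template, width)  # filter the template the same way
    m = len(pattern)
    hits = []
    i = 0
    while i + m <= len(filtered):
        if normalized_correlation(filtered[i:i + m], pattern) >= threshold:
            hits.append(i)
            i += m  # jump past this match so one event is reported once
        else:
            i += 1
    return hits


# Hypothetical "click" signature buried in an otherwise quiet recording.
template = [0, 1, 3, 1, 0]
signal = [0] * 10 + template + [0] * 10
print(detect_clicks(signal, template))
# [10]
```

In the microphone analogy, `template` is the chant the system listens for; everything else in the recording is crowd noise.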

Here’s a thought exercise. Suppose a BCI catches and decodes brain signals telling the vocal cords and mouth to make words. Suppose you also have easy access to a large language model (LLM), like OpenAI’s ChatGPT, to accurately predict words to continue sentences. What might you do?

In January 2023, a Stanford research team used a brain signal sensor (BrainGate), signal processing, and an AI word-prediction program similar to ChatGPT to enable a woman with ALS to speak to a computer at 62 words per minute. People without speech deficits typically talk at about 160 words a minute, but the woman has gone from mute to having real hope for everyday speech.
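The language-prediction half of that recipe can be illustrated with a deliberately tiny stand-in. This Python sketch is an assumption for illustration, not the Stanford team’s method: instead of a large language model, it counts word pairs (bigrams) in a hypothetical three-sentence corpus, then reorders candidate words, which a neural decoder might find equally plausible, by how often each followed the previous word.

```python
from collections import Counter, defaultdict


def train_bigrams(corpus):
    """Count how often each word follows each other word in a toy corpus."""
    follows = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            follows[prev][nxt] += 1
    return follows


def rank_candidates(follows, prev_word, candidates):
    """Order candidates by how often they followed prev_word in training.

    Words never seen after prev_word score 0 and keep their original order.
    """
    counts = follows.get(prev_word.lower(), Counter())
    return sorted(candidates, key=lambda word: counts[word], reverse=True)


# Hypothetical training text and candidate list, for illustration only.
corpus = ["I want some water", "I want to sleep", "I want to go home"]
model = train_bigrams(corpus)
print(rank_candidates(model, "want", ["water", "to", "some"]))
# ['to', 'some', 'water']
```

An LLM plays the same role with vastly more context and training text: it nudges an uncertain decoder toward the words a person is most likely to mean.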

Take the thought exercise another step. Computers, like the one in your smartphone, are phenomenal general-purpose portals to a universe of activities. They can cruise the internet and control all sorts of machines, including stuff in your home (“Hey Google, turn down the lights and play Sam Cooke.”). What if you could link your brain directly to your phone?

How about bypassing a spinal cord injury to control paralyzed legs?

Or shape a robotic hand and thrust it forward to fist-bump Barack Obama?

Turn the communication around: how about piping computer-generated images into your brain? In November 2022, Neuralink demonstrated sending information directly into a monkey’s visual cortex. The animal “saw” what the computer created; no screen or eyes needed. The artificial lens business won’t be disintermediated any time soon by brain implants piping whatever world you want directly into your mind, but the crazy reality is that the early tech building blocks exist.

In his final book, “Brief Answers to the Big Questions,” Stephen Hawking wrote, “I believe the future of communication is brain-computer interfaces.” Heavyweights who invest in the future are piling in. Bill Gates and Jeff Bezos are Synchron investors, and Musk has attracted almost $400M of venture money into Neuralink.

For persons with physical disabilities, these inventions are life-changing. Think how much we rely on interfacing with a computer: our work, information, entertainment, medical care, and fellowship increasingly flow through keyboards, finger taps, and voice commands. A person detached from those movements is disconnected from broad swaths of society.

The Powerful Current Under Our Surface

Our genetic makeup is our body’s blueprint. While prosthetics and BCIs extend us to better interact with the world, gene editing flows deeper. It changes our very building blocks. Our innate curiosity and aspirations draw us toward experimenting with reprogramming the human code.

Genetic Editing’s Model T

Gene editing to treat disease and alter traits has been a tantalizing goal since at least the discovery of DNA’s double-helix structure in 1953. Predictably, as soon as hyper-curious DNA sleuths had the computational power, they decoded human DNA. By 2003, the Human Genome Project had sequenced essentially the entire human genome.

Once we had the detailed architectural drawings, the next question was, “Hey, how do we fix the disease Mother Nature designed into this guy on Floor 29?”

In this century, new technology radically altered the opportunities and risks. Gene editing has been around for decades, but a new tool known as “CRISPR” has turned a twenty-million-dollar lab project into a home kit available on Amazon.

(“CRISPR” stands for, get ready – it’s a beast: “Clustered Regularly Interspaced Short Palindromic Repeats.” And people accuse Silicon Valley techies of slinging opaque jargon…).

In 2015, bioethicist Hank Greely of Stanford University compared CRISPR to the Model T Ford. It isn’t the first gene editing tool, but it may transform society through radical cost, availability, and dependability advantages.

Debilitating disorders caused by genetic anomalies can’t be cured by adding new tech to our physiology. In sickle-cell disease, for example, red blood cells become crescent-shaped instead of disk-shaped because of a fault in their hemoglobin, the oxygen-carrying protein.

The sickle-shaped cells cause anemia, infections, and strokes. Sickle-cell disease is much more common among Black and African-American people, among whom about one in every 360 babies is born with the disorder.

Amazingly, editing a person’s hemoglobin blueprint can stop the disease by reprogramming the body to make disc-shaped red blood cells. Editing either fixes the faulty hemoglobin gene or activates a different, healthy hemoglobin gene. It’s like re-writing a defective line of code in a software program. Only this computer program’s job is growing human blood. (There’s a vampire movie plot in here somewhere.)
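The “defective line of code” analogy is close to literal for sickle-cell disease. The sickle variant of the beta-globin gene differs from the typical version by a single DNA letter: the codon for the sixth amino acid of the beta-globin chain reads GTG (valine) instead of GAG (glutamic acid). This toy Python sketch treats that codon as a string and swaps the one letter back; the function names and the two-entry codon table are illustrative only, and real gene editing acts on DNA inside living cells, not on text.

```python
# Two-entry codon table: only the codons this toy example needs.
CODON_TO_AMINO_ACID = {"GAG": "glutamic acid", "GTG": "valine"}


def translate(codons):
    """Look up the amino acid each three-letter codon encodes."""
    return [CODON_TO_AMINO_ACID[codon] for codon in codons]


def edit_base(codon, position, new_base):
    """Return a copy of `codon` with one letter replaced, like fixing a one-character typo."""
    return codon[:position] + new_base + codon[position + 1:]


sickle_codon = "GTG"                         # sickle variant: encodes valine
repaired = edit_base(sickle_codon, 1, "A")   # swap the middle T back to A
print(repaired, translate([sickle_codon, repaired]))
# GAG ['valine', 'glutamic acid']
```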

You’re probably asking, “Wait a second. We can dial in features like blue eyes before a baby is born?” Not blue eyes yet, but embryo editing has been done. Genetically altered human twins, Lulu and Nana, edited as embryos in an attempt to make them resistant to HIV, were born in China in 2018.

The scientist responsible for Lulu and Nana’s modification, He Jiankui, said at the time he acted independently and with only the best intentions for the girls and their family. Today, after thinking it over in prison for three years, He says he acted too soon. Among the enormous problems: even the latest editing tools make mistakes, and even precise edits can have unpredictable side effects. Uh oh.

Genetics researchers are acutely aware of “off-target effects,” their term for edits that change something more than, or different from, what was planned. They also worry about the massive, murky complexity of what else a gene might do. Sure, He Jiankui made a genetic change intended to provide HIV resistance, but what are the downstream effects?

Another conundrum is the fuzzy interplay between nature and nurture: our genes are not the only factor in shaping who we are. Epigenetics plays a massive role in how each person’s genes are expressed in response to their environment.

Epigenetics is like a set of switches turning genes on or off. If our DNA is a blueprint, epigenetics are the instructions telling our cells (the builders) which parts of the blueprint to use. The instructions change depending on factors like environment, diet, and lifestyle. For example, a woman’s diet during pregnancy can significantly affect her child’s risk of obesity.

Enhancement for Life’s Sake

Human augmentation’s long history shows a couple of key patterns.

First, inventors created enhancements because they were passionate about relieving human pain and suffering. They were not playing in nature’s sandbox (“Hey, let’s see what happens when we change this part!”) or on a transhumanist spiritual quest.

Second, doctors and engineers eagerly experimented with the latest technologies. Their relentless drive to treat severe disorders led to tinkering with materials and tools from wood to bronze sheeting to art glass, telephones, jet engines, and artificial neural networks, all to find the perfect combination of feasibility and functionality.

We Are at a Turning Point

We are early in the BCI, AI, and genetic editing timelines. Yet a woman already speaks through a brain implant at 62 words per minute, well on the way to typical conversational speed. Formerly incurable genetic diseases are falling. Human babies have been born with designer features. What happens when we can enhance ourselves beyond natural human ability? Tech springs from our own creativity, so what does “natural ability” even mean?

Good readers can process around 300 words per minute; listening is a bit slower. There is scant research pinpointing the maximum rate at which our brain can produce language, but let’s make a simple wild-ass guess (SWAG) of around 300 words per minute: the same as reading, and roughly twice as fast as we usually speak. Would people without speech impairments endure brain implantation to get accelerated computer and internet speeds?

If an implanted BCI lets you interact 5X faster with your phone and the internet, would you want it? How about 10X? What risks would you be willing to take? How much would you pay?

If a BCI is massively expensive but offers the user a significant economic advantage, should we regulate access out of fairness? And if BCI implants are strongly regulated, what happens when a BCI implant clinic opens in a rogue nation? Would recipients be barred from coming back to their own country?

Genetically editing humans raises even bigger questions. Should we allow editing embryos’ code for skin tone, eye color, hair color, muscle mass, or adult butt shape?

Some genetic edits can be passed down to later generations. Gee, what could possibly go wrong?

International bodies are wrestling with these huge questions, but they are racing against democratized, widely accessible tools and techniques.

The Forward View is Fuzzy

Those questions sound like the stuff of science fiction, so it’s critical to sort pseudo-science from realistic scenarios. Here are some core things we know:

- Genetics and genetic editing are frightfully complicated (and deserve their own essay, or two, or a dozen). We still don’t know the relative impact of nature vs. nurture or the possible unintended effects of complex gene interactions.

- BCI systems like Synchron’s Stentrode, BrainGate, and Neuralink’s implant let humans operate computers using only their thoughts.

- The interaction includes natural language input, bypassing severed nerves to move limbs, and controlling robotics.

- The communication channel works both ways: systems can send information directly into the brain, bypassing our body’s senses.

- Improvements in signal processing, language prediction (AI including LLMs), motors, sensors, and electronics will progressively boost BCI recipients’ capabilities to unpredictable levels.

It is tough to project where human enhancement is going. Let’s focus on BCI to illustrate.

There are profound unknowns regarding the brain and cognition. In the words of respected neurosurgeon and author Dr. Henry Marsh, “Everything we are thinking and doing is generated by the activity in our brain, but we really don’t know how.”

There are 86 billion neurons in the human brain with myriad interconnections. Today we can monitor around 1,024 – the number of sensors in a Neuralink array. We can see patterns, like looking at a painting through a sheet of paper with small holes punched in it, but details are elusive.

For example, we don’t use language for all thoughts, so what are thoughts? Humans can count, add, and subtract three objects long before understanding language: how? Some see number sequences as visual patterns, like months being arranged in a circle: huh? Feeling love is not a string of words in our heads. Using language to interface with computers may be wildly inefficient, but what other medium do we have?

An often-asked question is how soon we will measure all of the brain’s neurons and their interactions, or at least enough to fully interface a brain with a computer at the highest biologically possible speed.

Some speculators simplistically assume the number of sensors in a brain probe will double every two years, like Moore’s Law for semiconductors. Starting with 1,024 sensors today, it would take between 26 and 27 doublings to reach 86 billion, so “conservatively,” 27 doublings, or 54 years.
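The doubling arithmetic is easy to check. This Python sketch takes the post’s figures (1,024 sensors per array, 86 billion neurons) and the speculators’ assumption of one doubling every two years; `doublings_needed` is a hypothetical helper name.

```python
import math


def doublings_needed(start, target):
    """Smallest whole number of doublings that grows `start` to at least `target`."""
    return math.ceil(math.log2(target / start))


sensors_today = 1_024           # sensors in one Neuralink array (figure from the post)
neurons = 86_000_000_000        # neurons in a human brain (figure from the post)
years_per_doubling = 2          # the Moore's-Law-style assumption under test

n = doublings_needed(sensors_today, neurons)
print(n, "doublings,", n * years_per_doubling, "years")
# 27 doublings, 54 years
```

Since 1,024 × 2^26 is about 69 billion and 1,024 × 2^27 is about 137 billion, 86 billion falls between 26 and 27 doublings.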

I don’t think so.

Not So Fast! Paul Allen and Complexity Brakes

Microsoft co-founder, the late Paul Allen, and Mark Greaves wrote about “complexity brakes,” unavoidable factors that slow technological progress. One is that most technologies, unlike semiconductors, don’t double in capability every two years. Another is that the more we learn about the brain, the more complicated it becomes to understand. The tools for studying the brain will improve more slowly than doubling optimists hope, and for every question we answer, multiple new questions jump in the way of progress.

BCI’s big unsolved problems include probe materials degrading inside the brain, damaging brain tissue when probes are inserted and removed, and probes migrating after they are placed. Understanding the 20-year impacts of a brain implant will take… 20 years! We haven’t figured out how to compress time. And after 20 years we’ll have a whole new set of issues to solve, each needing its own new experiment.

Think again about getting a brain implant to speed up your internet access by 10X. You have no guarantee what the impact on your brain will be 10 years from now. Still interested?

Sometimes we even have to revisit old questions, because earlier theories can’t explain what the latest tools reveal.

But Tech History Says…

So, we’re unlikely to bypass language and get massively high-bandwidth brain-internet connections anytime soon. Nor are we likely to soon have clinics where hopeful parents dial in their vision of a genetically perfect baby. But history is full of unexpected breakthroughs.

Wilhelm Röntgen accidentally discovered X-rays, now indispensable to medicine, when he noticed a screen glowing in his lab. He even named them “X-rays” because they were a total mystery.

Sir Alexander Fleming famously left a petri dish next to an open window, penicillium mold flew in, and the rest is history. And tens of millions of people are alive today because we have penicillin.

Both of those guys won Nobel Prizes.

So be ready, just in case. Keep a tiny side bet in your future plans, like a dollop of crypto in your portfolio: you or your kids may exchange information with machines and other people 10X faster than today, or your grandkids’ generation may be freed from genetic disorders.

A safe bet is that there will be a couple of huge, unexpected opportunities or threats arising from technological human enhancement in our lifetimes. They may not eliminate heritable diseases or radically extend our bodies’ baseline physical abilities, but solutions will appear with major social and commercial implications.

A Time Traveler From 5023 Suddenly Appears And…

She probably will not look like one of Star Trek’s “Borg.”

The Borg are far too clunky for 3,000 years of enhancement. Besides, Star Trek is set in the 2300s.

Diet, lifestyle, and lots of population mixing aside, today’s humans are pretty much physiologically identical to our global ancestors from 1,000 BCE. But the next 3,000 years of human augmentation will be different.

3,000 years is plenty of time to make spectacular advances in BCIs, genetic editing, and unimaginable materials and enhancements. We can predict little more than that a time traveler from 5023 will be very well suited to her era’s environment, tastes, and preferences, on whichever planet she lives. Unless she’s a misfit runaway who got hold of a time machine.

Enhancing our bodies through technology is an essentially human activity. In the last 125 years, our augmentation know-how has progressed from rudimentary hip replacements and loudspeakers as hearing helpers to talking to computers via thoughts and modifying our genetic makeup to fight disease. What might we create in the next 30 centuries?

My big hope is that if a 5023 time traveler pops back to 2023, rather than dropping dead of holy terror, we will shout, “Holy crap, you’re amazing! How do we turn us into you?”

What do you think?

Peter, I read this post with deep interest while feeling no effects from yesterday’s bocce ball game on my artificial left hip and artificial right knee. Of course, that’s such a lightweight sport. But then there’s my history: At 46 I played full-court basketball at Stanford’s Roble Gym. One year later pain prevented me from walking across campus for a meeting. I was suffering from the effects of Perthes disease. Hip replacement surgery changed the entire quality of my life as did the brace I wore from age 6 to 11. So I’m a fervent believer in technology. But I’m also concerned with a lack of development in human values. Like you, none of us can predict where we’re headed. But because we lack the maturity to get along with each other, our species faces a very risky future, made perhaps even riskier by our genius at tool building. Thanks for this great read, Peter. You’re an excellent writer.

Hi, Tim. A regular FutureResolve theme is, “Tech changes fast but people, not so much.” We haven’t yet managed to cause nuclear or bio Armageddon: fingers crossed that reasonable behavior is our nature 🙂 Thanks for the compliment, coming from you that’s huge! BTW, I just added a cartoon to this piece where a family takes a cyber-trip and finds that some things never change.

Here’s another one… What if 5023 time traveler does pop back to 2023 and instead, he/she says “holy crap… Not sure we ought to change that much of the you today, as your inherent imperfections are what make you so perfectly unique! And so we focused on improving the quality of our interactions instead, and are here to show you how to live and let live – coexist and cocreate”

Nice! I’d be blown away, then ask, “How did you solve social media trolling?”